WCF - Large Data Transfer - Best Practices

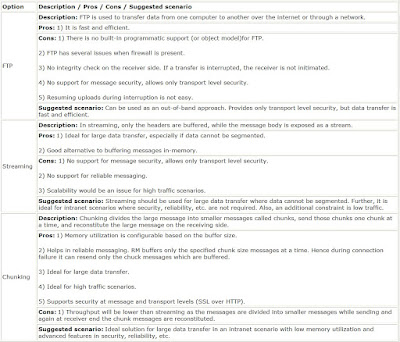

The image above lists the viable options for large data transfer, with their pros, cons, and possible usage scenarios.

Options mapped to scenarios:

1) Intranet scenario:

Context:

Assume the application is a line-of-business, Windows-based thick client. It is deployed within the organization's intranet, and interoperability is a low priority.

Further, assume that the application consumes some intranet services which handle medium to high payloads (several MB to a few GB). For example, the application might consume an in-built WCF service for uploading large data to a central database. The last assumption is that the payload data is saved in the database as a single entity (in an image column).

Recommendation:

FTP is not preferred since it is an out-of-band approach.

Chunking is not applicable, since the complete data for the service has to be buffered on the server side and stored in the image column of the database. If the database stored the data as chunks, or if the data were persisted to a file system, chunking could be leveraged. Also, since the service is intranet scoped, chunking does not add much value.

Streaming over TCP is the best option for an intranet application, especially when the payload data cannot be segmented.

Design considerations:

• In the request contract, use Stream as the input type so that any kind of stream (file, network, or memory) can be streamed.

• Use NetTcpBinding, which is optimal for the intranet but not interoperable.

• Set the message encoding to Binary, since interoperability is not required in the intranet scenario.

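• Use a self-hosted process (such as a console application or a Windows service) as the host, since IIS 6 cannot host TCP services. IIS 7 and later can host them through Windows Process Activation Service (WAS).

• On the client end, before consuming the service, check whether the service has been started, since a self-hosted TCP service does not start automatically the way an IIS-hosted service does.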

Advantages of Streaming over TCP:

• NetTcpBinding is optimized for WCF-to-WCF communication and is the fastest mode of data transfer.

• Performance-wise, streaming is much better than buffering or chunking.

• For servers, streaming is highly advantageous since it prevents the server from loading a complete copy of each file into memory for concurrent requests, something that could quickly lead to out-of-memory exceptions and prevent any requests from being serviced.

• On the client, streaming helps when files are very large, since the client may not have enough memory to buffer the entire file.
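The memory argument above can be illustrated with a minimal, language-neutral sketch. It is written in Python rather than against the WCF API, and `copy_stream` and its `buffer_size` parameter are hypothetical names chosen for illustration. The sketch copies a payload through a fixed-size buffer, so the memory held at any moment is bounded by the buffer rather than by the payload size.

```python
import io


def copy_stream(source, destination, buffer_size=64 * 1024):
    """Copy source to destination one buffer at a time.

    Returns (total_bytes, largest_read). largest_read never exceeds
    buffer_size, which is the point: memory use is bounded by the
    buffer, not by the size of the payload.
    """
    total = 0
    largest_read = 0
    while True:
        block = source.read(buffer_size)
        if not block:  # an empty read signals end of stream
            break
        destination.write(block)
        total += len(block)
        largest_read = max(largest_read, len(block))
    return total, largest_read


# An in-memory stream stands in for a file or network stream.
payload = io.BytesIO(b"x" * 1_000_000)
sink = io.BytesIO()
total, largest = copy_stream(payload, sink, buffer_size=64 * 1024)
print(total, largest)
```

A buffered transfer, by contrast, would hold the full payload in memory for every concurrent request before processing it.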

Disadvantages of Streaming over TCP:

• No support for interoperability.

• No support for message-level security. Only transport-level security is supported.

• No support for reliable messaging. Since TCP is a stateful protocol, handling connection drops is difficult, and one might have to stream the complete data all over again.

• Scalability is an issue for stateful protocols like TCP. If N clients connect to the server, there would be N open connections which might result in a server crash.

2) Internet scenario – High payloads:

Context:

Consider a typical ASP.NET web application which needs to consume internet-based services involving medium to high payloads (several MB to a few GB). For example, the web application might need to upload considerably large files to a central server.

Recommendation:

FTP and Streaming are ruled out since FTP is out-of-band and streaming is not scalable in an internet scenario.

A possible option is to use the Chunking Channel (MS community code), which is a custom channel extended from the built-in channels offered by WCF. With this channel, data travels over the network in chunks (or pieces), and the complexity is handled within the channel / binding instead of the client or the server. It might be useful for trivial scenarios. However, there is a significant learning curve involved in understanding and adopting these custom channels. Another drawback is that advanced features like reliability (recovering from a connection drop), durability, etc. are not supported out of the box. So Chunking Channels are not a suitable option here.

One more option is to use WCF Durable Services, introduced with WCF in .NET Framework 3.5. This feature is a mechanism for developing WCF services with reliability and persistence support. It is a good candidate for long-running processes, and the construction is easy thanks to its attribute-based model. However, since the process context is dehydrated to and rehydrated from storage for each call, there is a significant performance overhead (benchmark results suggest up to 35%).

The best approach is to implement chunking at the application level (over HTTP). In other words, the chunking is handled by the application (client and server) and not by the network layer. This keeps the construction and the resulting APIs simple, while offering features like reliability (handling connection drops), durability, etc.

Implementation:

The application level chunking service can be used for upload / download of large data between client and server. Chunking works only when the client and the server are aware of the chunking mechanism and communicate appropriately. Some common parameters could be chunk size, number of chunks, etc.
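As a rough illustration of the shared parameters mentioned above, the following Python sketch derives the number of chunks from the payload size and chunk size, splits the payload, and reassembles it on the receiving side. All function names are hypothetical and nothing here is WCF API. It also shows why application-level chunking copes with a dropped connection: the receiver can tell which chunk indexes are missing, so only those need to be resent.

```python
def plan_chunks(total_size, chunk_size):
    """Number of chunks needed for total_size bytes (integer ceiling)."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    return (total_size + chunk_size - 1) // chunk_size


def split_into_chunks(data, chunk_size):
    """Map chunk index -> chunk bytes."""
    return {
        index: data[offset:offset + chunk_size]
        for index, offset in enumerate(range(0, len(data), chunk_size))
    }


def missing_chunks(received, chunk_count):
    """Indexes the receiver has not stored yet."""
    return [index for index in range(chunk_count) if index not in received]


def reassemble(received, chunk_count):
    """Join chunks in index order; refuse if any chunk is missing."""
    missing = missing_chunks(received, chunk_count)
    if missing:
        raise ValueError(f"missing chunks: {missing}")
    return b"".join(received[index] for index in range(chunk_count))


data = bytes(range(256)) * 10                  # 2560 bytes of sample payload
chunk_size = 1000
chunk_count = plan_chunks(len(data), chunk_size)
chunks = split_into_chunks(data, chunk_size)

# Simulate a connection drop: chunk 1 never arrives on the first attempt.
received = {index: chunk for index, chunk in chunks.items() if index != 1}
to_resend = missing_chunks(received, chunk_count)

# Resend only what is missing, then rebuild the payload.
for index in to_resend:
    received[index] = chunks[index]
restored = reassemble(received, chunk_count)
```

In this sketch the chunks are keyed by index, so they can arrive in any order and a repeated chunk simply overwrites the earlier copy.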

Also, two potential storage providers, namely SQL Server and File System, could be configured. Note that for the SQL Server provider, the data should be stored as chunks (separate records in a table), whereas for the File System provider the storage area is just a Windows folder. In the latter case, uploading and downloading data boils down to copying chunks of data across Windows folders on disparate systems.

Design considerations:

• For the internet scenario, the service could be configured with WSHttpBinding or BasicHttpBinding. If sessions are to be supported, use the corresponding context binding (WSHttpContextBinding or BasicHttpContextBinding).

• Use Text message encoding for interoperability, or MTOM for optimized transfer of binary data.

• Each endpoint (based on binding) should be deployed in a separate service host.

• The data access providers (SQL Server and File System) could implement a common interface (Provider model). The service implementation layer would instantiate the appropriate provider based on configuration settings. This would ensure loose coupling and also provide extensibility.

• For SQL Server provider, a file upload means storing the file metadata and its chunks as records in tables. The file metadata could be stored in a master table and its chunks could go into a child table. Consequently, a file download involves reading the table contents and reconstructing the file on the client.

• For either provider, it is important to handle upload requests for files having the same name. A simple solution is to append GUIDs to get unique names. Other implementations might be needed based on the scenario.

• Another good practice is to use an InitializeUpload() or InitializeDownload() method to initialize the data transfer before the actual method call. These initialization methods could establish the chunk size, number of chunks, status of the server, etc. so that the client and server are in sync.

• For testing purposes, it is useful to call the service methods asynchronously (using callback methods) so that the status of the transfer (and of each chunk) can be displayed to the client. However, do not use the asynchronous call mechanism for performance testing.
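The design considerations above can be sketched as follows. This is a simplified Python illustration under stated assumptions, not a WCF implementation: `StorageProvider`, `FileSystemProvider`, `SqlProvider`, `TransferService` and the helper functions are hypothetical names, and SQLite stands in for SQL Server only so that the sketch is self-contained. It shows the provider model, the master/child tables, GUID-suffixed names, and an initialization call that agrees on chunk size and chunk count.

```python
import os
import sqlite3
import tempfile
import uuid
from abc import ABC, abstractmethod


class StorageProvider(ABC):
    """Common interface (hypothetical) that both providers implement."""
    @abstractmethod
    def create_file(self, stored_name, chunk_count): ...
    @abstractmethod
    def save_chunk(self, stored_name, index, data): ...
    @abstractmethod
    def load_chunk(self, stored_name, index): ...


class FileSystemProvider(StorageProvider):
    """Stores each chunk as a separate file in one folder."""
    def __init__(self, folder):
        self.folder = folder
        os.makedirs(folder, exist_ok=True)

    def _path(self, stored_name, index):
        return os.path.join(self.folder, f"{stored_name}.{index:06d}.chunk")

    def create_file(self, stored_name, chunk_count):
        pass  # nothing to record: the chunk files themselves are the storage

    def save_chunk(self, stored_name, index, data):
        with open(self._path(stored_name, index), "wb") as handle:
            handle.write(data)

    def load_chunk(self, stored_name, index):
        with open(self._path(stored_name, index), "rb") as handle:
            return handle.read()


class SqlProvider(StorageProvider):
    """Master table for file metadata, child table for chunks (SQLite stand-in)."""
    def __init__(self, connection):
        self.db = connection
        self.db.execute("CREATE TABLE IF NOT EXISTS files "
                        "(name TEXT PRIMARY KEY, chunk_count INTEGER)")
        self.db.execute("CREATE TABLE IF NOT EXISTS chunks "
                        "(name TEXT REFERENCES files(name), idx INTEGER, "
                        "data BLOB, PRIMARY KEY (name, idx))")

    def create_file(self, stored_name, chunk_count):
        self.db.execute("INSERT INTO files VALUES (?, ?)", (stored_name, chunk_count))
        self.db.commit()

    def save_chunk(self, stored_name, index, data):
        self.db.execute("INSERT OR REPLACE INTO chunks VALUES (?, ?, ?)",
                        (stored_name, index, data))
        self.db.commit()

    def load_chunk(self, stored_name, index):
        row = self.db.execute("SELECT data FROM chunks WHERE name = ? AND idx = ?",
                              (stored_name, index)).fetchone()
        return bytes(row[0])


class TransferService:
    """Service layer: depends only on the StorageProvider interface."""
    def __init__(self, provider, chunk_size):
        self.provider = provider
        self.chunk_size = chunk_size

    def initialize_upload(self, file_name, total_size):
        # Append a GUID so two uploads of the same file name never collide.
        stored_name = f"{file_name}_{uuid.uuid4().hex}"
        chunk_count = (total_size + self.chunk_size - 1) // self.chunk_size
        self.provider.create_file(stored_name, chunk_count)
        return {"stored_name": stored_name, "chunk_size": self.chunk_size,
                "chunk_count": chunk_count}

    def upload_chunk(self, stored_name, index, data):
        self.provider.save_chunk(stored_name, index, data)

    def download_chunk(self, stored_name, index):
        return self.provider.load_chunk(stored_name, index)


def upload(service, file_name, data):
    """Client side: initialize first, then send the agreed number of chunks."""
    session = service.initialize_upload(file_name, len(data))
    size = session["chunk_size"]
    for index in range(session["chunk_count"]):
        chunk = data[index * size:(index + 1) * size]
        service.upload_chunk(session["stored_name"], index, chunk)
    return session


def download(service, session):
    """Client side: fetch every chunk in order and rebuild the file."""
    return b"".join(service.download_chunk(session["stored_name"], index)
                    for index in range(session["chunk_count"]))


# The same service code runs against either provider.
for provider in (SqlProvider(sqlite3.connect(":memory:")),
                 FileSystemProvider(tempfile.mkdtemp())):
    service = TransferService(provider, chunk_size=4)
    session = upload(service, "report.bin", b"hello chunked world")
    print(type(provider).__name__, session["chunk_count"])
```

Because the service layer only sees the common interface, choosing a provider from configuration would amount to constructing a different class at start-up.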

References:

• MSDN's Chunking Channel sample with source code.

• Yasser Shohoud's high-level analysis on moving large data.

• Mike Taulty's insightful screencast on Durable Services.

• Jesus Rodriguez's write-up on Durable Services with code snippets.
