Why Test Nomenclature Matters for Engineering Leaders

Introduction

As a leader overseeing engineering teams, you are responsible for ensuring that development processes are efficient, scalable, and aligned with business goals. Testing, though often seen as a purely technical task, plays a pivotal role in meeting these goals. Misaligned testing practices and unclear definitions of testing terms can result in inefficiencies, delayed releases, and a loss of trust within teams.

In this post, we’ll explore why creating a shared testing vocabulary is not just a tactical necessity but a strategic investment that affects your organization’s ability to deliver software quickly and reliably.

What’s in a name?

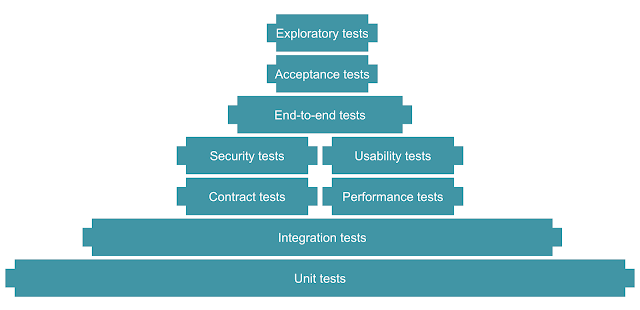

A quick online search will show just how many terms exist for different testing types and tactics: end-to-end tests, regression tests, contract tests, smoke tests, and more. Without context and consistency, the implementation and purpose of these tests are left to interpretation.

For engineering leaders, the impact of these inconsistencies is significant. Misunderstandings about testing types can result in duplicate work, misaligned priorities, and increased technical debt. Confusion among teams leads to inefficiencies that cascade through the project lifecycle, affecting delivery timelines, quality, and even customer trust.

Why is naming hard?

Let’s explore some key testing types where naming clarity is imperative.

End-to-End Tests

While end-to-end tests are understood quite consistently, related test types such as functional, system, acceptance, and regression tests tend to overlap in purpose, usage, and scope. Misaligned definitions of these higher-level tests can cause confusion not just within engineering teams, but also with business stakeholders, regulatory bodies, and external partners.

Recommendation: Align early on a single term, such as end-to-end tests, based on what resonates with your team, and use it consistently. If you maintain a separate, larger suite of regression tests that grows across sprints, aim to consolidate it into a single set of end-to-end tests. Note that end-to-end tests can be slow and flaky; refactor them to focus on happy paths and invest in the lower-level tests described next.

Integration Tests

While integration tests are a useful tactic in your test plan, they often get conflated with API and contract tests, leading to over-testing and tool proliferation. There is also often a lack of clarity about the level of module interaction being tested, specifically whether lower-level dependencies and data interactions are “mocked” (i.e., dummied out). Leaving this detail to developer discretion leads to inefficiencies and increased maintenance costs.

Recommendation: Tag your integration tests as broad (live system dependencies) or narrow (test doubles). This gives engineering teams clearer expectations and makes execution times more predictable. Feel free to use terms other than broad and narrow if your team prefers. The shared understanding should be that broad integration tests require deployed services and seeded data, change system state when invoked, and must run sequentially. Narrow integration tests, on the other hand, use “test doubles” in place of live services, run more quickly, and can run in any order.
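A minimal Python sketch of the narrow side of this distinction, assuming a hypothetical `CheckoutService` that depends on a payment gateway. The `integration` decorator and `REGISTRY` are illustrative stand-ins for whatever tagging mechanism your test framework provides. The narrow tests replace the live gateway with an in-memory test double, so they need no deployment or seeded data and can run in any order.

```python
from typing import Callable, Protocol

# Hypothetical registry that buckets integration tests by the team's agreed
# tag, so a runner can pick "narrow" tests for fast feedback and schedule
# "broad" tests (live dependencies, sequential) separately.
REGISTRY: dict[str, list[Callable[[], None]]] = {"broad": [], "narrow": []}


def integration(scope: str) -> Callable[[Callable[[], None]], Callable[[], None]]:
    """Tag a test function as a 'broad' or 'narrow' integration test."""
    if scope not in REGISTRY:
        raise ValueError(f"unknown integration scope: {scope!r}")

    def decorator(test: Callable[[], None]) -> Callable[[], None]:
        REGISTRY[scope].append(test)
        return test

    return decorator


class PaymentGateway(Protocol):
    def charge(self, account_id: str, amount_cents: int) -> bool: ...


class CheckoutService:
    """Hypothetical module under test; it depends on a payment gateway."""

    def __init__(self, gateway: PaymentGateway) -> None:
        self.gateway = gateway

    def checkout(self, account_id: str, amount_cents: int) -> str:
        if amount_cents <= 0:
            return "rejected"
        return "paid" if self.gateway.charge(account_id, amount_cents) else "failed"


class FakePaymentGateway:
    """Test double: records charges in memory instead of calling a live service."""

    def __init__(self, succeed: bool = True) -> None:
        self.succeed = succeed
        self.charges: list[tuple[str, int]] = []

    def charge(self, account_id: str, amount_cents: int) -> bool:
        self.charges.append((account_id, amount_cents))
        return self.succeed


@integration("narrow")
def test_checkout_charges_gateway() -> None:
    gateway = FakePaymentGateway()
    assert CheckoutService(gateway).checkout("acct-1", 500) == "paid"
    assert gateway.charges == [("acct-1", 500)]


@integration("narrow")
def test_checkout_reports_declined_charge() -> None:
    gateway = FakePaymentGateway(succeed=False)
    assert CheckoutService(gateway).checkout("acct-2", 500) == "failed"


def run(scope: str) -> int:
    """Run every test registered under a scope and return how many ran."""
    for test in REGISTRY[scope]:
        test()
    return len(REGISTRY[scope])


if __name__ == "__main__":
    print(f"narrow integration tests passed: {run('narrow')}")
```

In pytest, the same idea is usually expressed with custom markers (for example `@pytest.mark.narrow`) registered in the project configuration and selected with `pytest -m narrow`. The marker names here are assumptions; use whichever labels your team agrees on.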

Unit Tests

Unit testing is a key engineering practice for driving design (hence test-driven development) and writing clean code, with the resulting tests as a welcome side effect. When done right, it creates a fast feedback loop that enables teams to ship software quickly and with confidence. However, many organizations don’t use unit tests effectively to reduce costs upstream, where defects are cheapest to fix. Either unit tests are written after implementation, or existing unit tests are modified just to make the code changes pass.

Recommendation: Having written software both with and without TDD, I understand how hard it is to get right, to justify its business value, and to scale it across larger teams. Applying a norm to a greenfield project is also far easier than inheriting a brownfield codebase and working out its process nuances. It’s essential to ensure teams are incentivized to prioritize unit tests and to establish governance mechanisms that preserve their long-term value.
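To make the practice concrete, here is a minimal sketch of unit tests for a hypothetical `apply_discount` function, using Python’s standard `unittest` module. In a test-first workflow, each test would be written and seen to fail before the corresponding behavior is implemented. The tests pin down expected behavior, including the error case, and run in milliseconds because they touch no external dependencies.

```python
import unittest


def apply_discount(price_cents: int, percent: int) -> int:
    """Hypothetical function under test: return the discounted price in cents.

    Integer division rounds the discount down, so the customer never pays
    less than the exact discounted amount.
    """
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price_cents - (price_cents * percent) // 100


class ApplyDiscountTest(unittest.TestCase):
    def test_ten_percent_off(self) -> None:
        self.assertEqual(apply_discount(1000, 10), 900)

    def test_zero_percent_leaves_price_unchanged(self) -> None:
        self.assertEqual(apply_discount(999, 0), 999)

    def test_rejects_out_of_range_percent(self) -> None:
        with self.assertRaises(ValueError):
            apply_discount(1000, 150)
```

Saved as a module, these tests can be run with `python -m unittest <module_name>`.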

Shared understanding

A clear and consistent understanding of testing types and their purpose in your business context allows your teams to operate more efficiently, avoid duplication, and collaborate better across functions. For senior leaders, this means better visibility into quality metrics, more predictable release cycles, and better alignment between technology and business objectives. Here are some key tips from my journey:

- Create an internal glossary of testing terms: This ensures everyone knows what’s being discussed and avoids unnecessary back-and-forth over definitions.

- Leverage Lightweight Decision Records (LDRs): Formalize decisions on testing terminology, ensuring that changes are visible, stored, and committed. I’ve described this technique in previous posts.

- Monitor key quality metrics: Track indicators such as lead time for changes, change failure rate, code coverage, and test execution time to ensure your test pyramid is optimized and delivering value for your investment.
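Two of these indicators are simple enough to compute from deployment history. The sketch below assumes a hypothetical `Deployment` record per production release. It follows the commonly used DORA-style definitions: change failure rate as the share of deployments that caused a production failure, and lead time for changes as the median time from commit to production. Where the records come from (CI/CD logs, an incident tracker) is left open.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass(frozen=True)
class Deployment:
    """Hypothetical record of one production deployment."""

    committed_at: datetime  # when the change was committed
    deployed_at: datetime   # when it reached production
    caused_failure: bool    # whether it led to an incident, rollback, or hotfix


def change_failure_rate(deployments: list[Deployment]) -> float:
    """Fraction of deployments that caused a failure in production."""
    if not deployments:
        raise ValueError("need at least one deployment")
    return sum(d.caused_failure for d in deployments) / len(deployments)


def median_lead_time(deployments: list[Deployment]) -> timedelta:
    """Median time from commit to running in production."""
    if not deployments:
        raise ValueError("need at least one deployment")
    return median(d.deployed_at - d.committed_at for d in deployments)


if __name__ == "__main__":
    start = datetime(2024, 1, 1, 9, 0)
    history = [
        Deployment(start, start + timedelta(hours=2), caused_failure=False),
        Deployment(start, start + timedelta(hours=4), caused_failure=True),
        Deployment(start, start + timedelta(hours=6), caused_failure=False),
    ]
    print(f"change failure rate: {change_failure_rate(history):.0%}")  # 33%
    print(f"median lead time: {median_lead_time(history)}")  # 4:00:00
```

The median is used rather than the mean so that a single long-running change does not dominate the lead-time figure.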

Wrap-up

Establishing a shared vocabulary for testing is essential for teams to align effectively and for leadership to have visibility into quality metrics and delivery predictability. By focusing on clarity and consistency, organizations can minimize inefficiencies and set the foundation for fast, reliable software development.
